Gunicorn is one of the most popular and battle-tested WSGI servers for Python web applications. We'll look at some of its most important configuration options with an eye toward performance, starting with the default worker class, sync.

Gunicorn always runs one master process and one or more worker processes. The worker class we choose determines how those worker processes handle requests. With sync, each worker process has a single thread and handles requests one at a time on that thread.
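The command-line flags used in this post can also live in a Gunicorn configuration file, which is ordinary Python. Here is a minimal sketch using the setting names `bind`, `workers`, and `worker_class`. The file name `gunicorn.conf.py` is our choice and is passed explicitly with `-c`:

```python
# gunicorn.conf.py: a sketch mirroring the command-line flags used below.
# Start with: gunicorn -c gunicorn.conf.py wsgi:application

# Address and port to listen on (same as --bind)
bind = "127.0.0.1:8000"

# Number of worker processes the master process forks (same as --workers)
workers = 1

# How each worker handles requests; "sync" is the default (same as --worker-class)
worker_class = "sync"
```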

Getting Started

Here is a very simple WSGI application that does about 1 second of IO-bound work, CPU-bound work, or a mix of the two. The split is set by the IO_BOUND and CPU_BOUND environment variables, which are percentages that must add up to 100. We'll use this app to measure the performance of sync workers.

```python
# wsgi.py
import os
import time

import psycopg2

# Choose if our work is IO bound or CPU bound, or a mix of the two.
# The two percentages must add up to 100.
io_percentage = int(os.getenv("IO_BOUND", 0))
cpu_percentage = int(os.getenv("CPU_BOUND", 0))
if io_percentage + cpu_percentage != 100:
    raise ValueError(
        f"IO_BOUND ({io_percentage}) + CPU_BOUND ({cpu_percentage}) must equal 100"
    )


def application(environ, start_response):
    start = time.time()

    if io_percentage:
        sleep_seconds = io_percentage / 100.0
        # Connect to a local database for IO bound work
        db_connection = psycopg2.connect(
            host="127.0.0.1",
            port="5432",
            dbname="postgres",
            user="postgres",
            password="password",
        )
        try:
            db_cursor = db_connection.cursor()
            # The database server sleeps; this worker just waits on the socket
            db_cursor.execute("SELECT pg_sleep(%s)", (sleep_seconds,))
        finally:
            db_connection.close()

    if cpu_percentage:
        # max_iterations is tuned to burn up 1 second on my machine
        # when there is no contention for resources.
        max_iterations = (cpu_percentage / 100.0) * 5000000
        iterations_done = 0
        work = 15
        while iterations_done < max_iterations:
            # do arbitrary math to utilize the CPU
            work = (work + 7 + work / 2) % 1000
            iterations_done += 1

    duration = time.time() - start
    print(f"IO work: {io_percentage}, CPU work: {cpu_percentage}, time: {duration:.1f} seconds")

    start_response("204 No Content", [])
    return [b""]
```
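We can check the WSGI contract without Gunicorn or PostgreSQL by calling an application directly. In this sketch, `sleepy_application` is a hypothetical stand-in for the app above that uses `time.sleep` instead of `pg_sleep`. `call_wsgi` is a small helper of our own, built on the standard library's `wsgiref.util.setup_testing_defaults`:

```python
import time
from wsgiref.util import setup_testing_defaults

# Hypothetical stand-in for the app above: time.sleep replaces
# pg_sleep, so no PostgreSQL server is needed.
SLEEP_SECONDS = 0.1


def sleepy_application(environ, start_response):
    time.sleep(SLEEP_SECONDS)
    start_response("204 No Content", [])
    return [b""]


def call_wsgi(app, extra_environ=None):
    """Call a WSGI app once, without a server; return (status, headers, body)."""
    environ = {}
    setup_testing_defaults(environ)  # adds REQUEST_METHOD, PATH_INFO, wsgi.* keys
    environ.update(extra_environ or {})
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status
        captured["headers"] = headers
        return lambda data: None  # the legacy write() callable; unused here

    result = app(environ, start_response)
    try:
        body = b"".join(result)
    finally:
        # WSGI servers must call close() on the iterable if it has one
        if hasattr(result, "close"):
            result.close()
    return captured["status"], captured["headers"], body
```

`call_wsgi(sleepy_application)` returns `("204 No Content", [], b"")`. With PostgreSQL running and `IO_BOUND`/`CPU_BOUND` set, the same helper should also work on the real `application` from `wsgi.py`.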

Let's run the app with Gunicorn's sync worker, then give it some load using hey, a simple load generator.

```
$ # Run one sync worker process, 100% IO-bound work, listening on port 8000
$ IO_BOUND=100 gunicorn --bind=127.0.0.1:8000 --workers=1 --worker-class=sync wsgi:application
[2021-01-18 16:18:37 -0500] [13085] [INFO] Starting gunicorn 20.0.4
[2021-01-18 16:18:37 -0500] [13085] [INFO] Listening at: http://127.0.0.1:8000 (13085)
[2021-01-18 16:18:37 -0500] [13085] [INFO] Using worker: sync
[2021-01-18 16:18:37 -0500] [13088] [INFO] Booting worker with pid: 13088
```

```
$ # in another terminal
$ # Make 10 requests, 10 at a time, with no timeout, to port 8000
$ hey -n 10 -c 10 -t 0 http://127.0.0.1:8000

Summary:
  Total:        10.1290 secs
  Slowest:      10.1275 secs
  Fastest:      1.0094 secs
  Average:      5.5698 secs
  Requests/sec: 0.9873

Response time histogram:
  1.009 [1]   |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
  1.921 [0]   |
  2.833 [1]   |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
  3.745 [1]   |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
  4.657 [1]   |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
  5.568 [1]   |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
  6.480 [1]   |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
  7.392 [1]   |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
  8.304 [1]   |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
  9.216 [1]   |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
  10.127 [1]  |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
```

Here we see that the 10 requests were served one at a time. Since each request includes 1 second of IO-bound work, we can handle only 1 request/second. And since there is no concurrency, the slowest response took 10 seconds because it had to wait for all the other requests to complete. This exactly matches Gunicorn's description of the sync worker:

> The most basic and the default worker type is a synchronous worker class that handles a single request at a time.

Let's see what happens as we use more workers, still with an IO-bound workload.

```
$ # 5 IO-bound worker processes
$ IO_BOUND=100 gunicorn --bind=127.0.0.1:8000 --workers=5 --worker-class=sync wsgi:application
[2021-01-18 16:27:59 -0500] [13232] [INFO] Starting gunicorn 20.0.4
[2021-01-18 16:27:59 -0500] [13232] [INFO] Listening at: http://127.0.0.1:8000 (13232)
[2021-01-18 16:27:59 -0500] [13232] [INFO] Using worker: sync
[2021-01-18 16:27:59 -0500] [13235] [INFO] Booting worker with pid: 13235
[2021-01-18 16:27:59 -0500] [13237] [INFO] Booting worker with pid: 13237
[2021-01-18 16:27:59 -0500] [13238] [INFO] Booting worker with pid: 13238
[2021-01-18 16:27:59 -0500] [13239] [INFO] Booting worker with pid: 13239
[2021-01-18 16:27:59 -0500] [13240] [INFO] Booting worker with pid: 13240
```

```
$ # 30 simultaneous requests
$ hey -n 30 -c 30 -t 0 http://127.0.0.1:8000

Summary:
  Total:        6.1011 secs
  Slowest:      6.0951 secs
  Fastest:      1.0144 secs
  Average:      3.5539 secs
  Requests/sec: 4.9171

Response time histogram:
  1.014 [1]   |■■■■■■■■
  1.522 [4]   |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
  2.031 [2]   |■■■■■■■■■■■■■■■■
  2.539 [3]   |■■■■■■■■■■■■■■■■■■■■■■■■
  3.047 [3]   |■■■■■■■■■■■■■■■■■■■■■■■■
  3.555 [2]   |■■■■■■■■■■■■■■■■
  4.063 [3]   |■■■■■■■■■■■■■■■■■■■■■■■■
  4.571 [2]   |■■■■■■■■■■■■■■■■
  5.079 [1]   |■■■■■■■■
  5.587 [4]   |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
  6.095 [5]   |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
```

No surprise here. We have 5 processes, and each one takes 1 second to handle a request. That means we can handle 5 requests/second. The 30 requests therefore need 6 rounds, so the slowest response took 6 seconds.
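Both runs line up with a simple model. Suppose all requests arrive at once and each takes a fixed time. Then request `i` (counting from 0) finishes after `i // workers + 1` rounds. This is an idealized sketch that ignores connection setup, process scheduling, and hey's own overhead:

```python
def sync_worker_latencies(num_requests, num_workers, service_seconds):
    """Idealized response times when all requests arrive at the same moment.

    Assumes every request takes exactly service_seconds, each sync worker
    handles one request at a time, and there is no other overhead.
    """
    return [
        (i // num_workers + 1) * service_seconds
        for i in range(num_requests)
    ]


def summarize(latencies):
    total = max(latencies)  # wall time until the last response arrives
    return {
        "slowest": max(latencies),
        "fastest": min(latencies),
        "average": sum(latencies) / len(latencies),
        "requests_per_sec": len(latencies) / total,
    }


one_worker = summarize(sync_worker_latencies(10, 1, 1.0))
five_workers = summarize(sync_worker_latencies(30, 5, 1.0))
```

For the two runs above, the model predicts averages of 5.5 s and 3.5 s and throughputs of 1 and 5 requests/second. The measured averages of 5.57 s and 3.55 s are slightly higher, which is consistent with per-request overhead that the model leaves out.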

It might seem that we can keep adding workers indefinitely to get more throughput. That holds only until we run out of memory. This tiny application uses only about 9 MB per worker process, measured as PSS (proportional set size). PSS is the best measure here because it divides shared memory pages evenly among the processes that share them:

```
$ smem -t -k --processfilter="wsgi:application"
  PID User     Command                         Swap      USS      PSS      RSS
14228 joel     /home/joel/.virtualenvs/gun        0     5.0M     7.2M    22.5M
14235 joel     /home/joel/.virtualenvs/gun        0     6.7M     9.0M    25.4M
14232 joel     /home/joel/.virtualenvs/gun        0     6.8M     9.0M    25.4M
14231 joel     /home/joel/.virtualenvs/gun        0     6.8M     9.0M    25.4M
14233 joel     /home/joel/.virtualenvs/gun        0     6.8M     9.0M    25.4M
14234 joel     /home/joel/.virtualenvs/gun        0     6.8M     9.0M    25.4M
```
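smem isn't installed everywhere. On Linux 4.14 and later, the kernel exposes a per-process PSS total in `/proc/<pid>/smaps_rollup`, so a few lines of Python can serve as a rough substitute. This is a sketch, and the helper names are our own:

```python
def parse_pss_kb(smaps_rollup_text):
    """Return the Pss value (in kB) from the text of /proc/<pid>/smaps_rollup."""
    for line in smaps_rollup_text.splitlines():
        # The line looks like "Pss:   9216 kB"; the colon excludes Pss_Anon etc.
        if line.startswith("Pss:"):
            return int(line.split()[1])
    raise ValueError("no Pss line found")


def read_pss_kb(pid):
    """Read the PSS of one process; assumes Linux 4.14+ (smaps_rollup)."""
    with open(f"/proc/{pid}/smaps_rollup") as f:
        return parse_pss_kb(f.read())
```

Summing `read_pss_kb(pid)` over the master and worker PIDs gives the same kind of total that `smem -t` reports.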

By contrast, the Django app that generates this static site uses on the order of 100 MB in its single process when running under the Django development server. On a cheap machine with 1 GB of RAM and 1 CPU, you wouldn't want more than 4 or 5 such worker processes. With that in mind, Gunicorn's recommendation of (2 x $num_cores) + 1 workers as a starting point makes more sense.
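The CPU heuristic and the memory ceiling can be combined into a single worker count. In this sketch, `reserved_mb` (memory set aside for the OS, database, and so on) and the 100 MB per-worker figure are illustrative assumptions, not measurements from a Gunicorn deployment:

```python
import os


def suggested_workers(num_cores):
    # Gunicorn's documented starting point: (2 x $num_cores) + 1
    return 2 * num_cores + 1


def workers_that_fit(total_mb, reserved_mb, per_worker_mb):
    # How many workers fit in the memory left after the (assumed) reservation
    return max(1, (total_mb - reserved_mb) // per_worker_mb)


def choose_workers(num_cores, total_mb, reserved_mb, per_worker_mb):
    # Use whichever limit is lower: the CPU heuristic or the memory ceiling
    return min(suggested_workers(num_cores),
               workers_that_fit(total_mb, reserved_mb, per_worker_mb))


# For example, in a gunicorn.conf.py (the memory figures are placeholders):
workers = choose_workers(os.cpu_count() or 1, total_mb=1024,
                         reserved_mb=512, per_worker_mb=100)
```

On the 1 GB/1 CPU machine above, `choose_workers(1, 1024, 512, 100)` returns 3. The memory budget would allow 5 workers, but the heuristic caps the count at 3.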

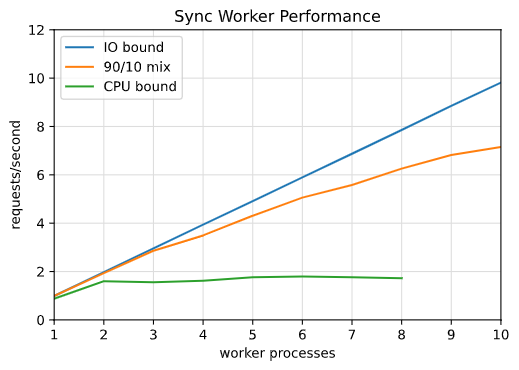

Charting Performance

We ran the load test above for three different workloads: pure IO, pure CPU, and a 90% IO/10% CPU blend. Each workload is benchmarked with 1 to 10 workers.

For the pure IO workload, each request consumes very little CPU as most of the time is spent waiting on PostgreSQL. The system is very underutilized, and our throughput increases as we use more worker processes. A realistic example of this workload might be a background worker making an HTTP request to charge a credit card with Stripe, send an SMS with Twilio, or send an email with Mailgun.
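Comparing wall-clock time with CPU time shows how little computing IO-bound work actually does. In this sketch, `time.sleep` stands in for the worker waiting on `pg_sleep`. In both cases the process is blocked rather than computing:

```python
import time


def measure(work, *args):
    """Return (wall_seconds, cpu_seconds) spent running work(*args)."""
    wall_start = time.perf_counter()
    cpu_start = time.process_time()  # CPU time used by this process only
    work(*args)
    wall = time.perf_counter() - wall_start
    cpu = time.process_time() - cpu_start
    return wall, cpu


# time.sleep stands in for waiting on PostgreSQL's pg_sleep
wall, cpu = measure(time.sleep, 0.2)
print(f"wall: {wall:.2f} s, cpu: {cpu:.3f} s")
```

The CPU figure stays near zero while the wall-clock figure tracks the sleep. That idle CPU is why adding workers keeps helping the pure IO workload.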

For the pure CPU workload, each request occupies one core completely. Because my machine has four cores, the system is completely utilized with four worker processes. Surprisingly, adding more than two worker processes does not improve throughput at all. A realistic example of this workload might be image processing or computer vision. The CPU monitor below shows one, two, three, and four cores being fully utilized as the same number of worker processes run the CPU-bound workload.

Finally, the blended workload falls in between the other two. Even a 10% CPU-bound contribution sharply reduces throughput.
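The flat throughput beyond two workers in the pure CPU run raises a question: does the machine itself scale a pure-Python CPU loop across cores, independent of Gunicorn? One way to check is to run the same arithmetic as `wsgi.py` in 1 to N processes at once and compare wall-clock times. This is a sketch using `concurrent.futures.ProcessPoolExecutor`. The timings include pool start-up, so treat them as rough:

```python
import os
import time
from concurrent.futures import ProcessPoolExecutor


def burn_cpu(iterations):
    # Same arbitrary arithmetic as the CPU-bound branch of wsgi.py
    work = 15
    for _ in range(iterations):
        work = (work + 7 + work / 2) % 1000
    return work


def run_parallel(num_processes, iterations):
    """Run one burn_cpu call in each of num_processes processes.

    Returns wall-clock seconds until all finish. With perfect scaling this
    stays roughly constant as num_processes grows.
    """
    start = time.perf_counter()
    with ProcessPoolExecutor(max_workers=num_processes) as pool:
        list(pool.map(burn_cpu, [iterations] * num_processes))
    return time.perf_counter() - start


def compare_scaling(iterations=5_000_000):
    # One measurement per process count, up to the logical CPU count
    max_processes = os.cpu_count() or 1
    return {n: run_parallel(n, iterations) for n in range(1, max_processes + 1)}
```

Call `compare_scaling()` from a script under an `if __name__ == "__main__":` guard. `ProcessPoolExecutor` requires that guard on platforms that start processes with spawn.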

Takeaways

One important takeaway is that CPU-bound work should not be done in pure Python if throughput matters. For example, a Python program can do image processing in fast C code by using the Pillow library. More generally, the Python ecosystem has libraries that call into C for most CPU-bound tasks you will encounter. Even if the sync worker is the best way to run compute-intensive tasks in Gunicorn, these measurements suggest it may not make productive use of all cores at once.
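The same principle shows up with the standard library alone, without Pillow. `bytes.count` runs in C inside CPython, while an equivalent `for` loop pushes every byte through the interpreter. This is a small illustrative comparison; the data and the byte value are arbitrary:

```python
import timeit

# About 1 MB of sample data in which every byte value appears 4000 times
DATA = bytes(range(256)) * 4000


def count_python(data, value):
    # Pure-Python loop: every byte passes through the interpreter
    total = 0
    for byte in data:
        if byte == value:
            total += 1
    return total


def count_c(data, value):
    # bytes.count is implemented in C inside CPython
    return data.count(bytes([value]))


py_seconds = timeit.timeit(lambda: count_python(DATA, 65), number=3)
c_seconds = timeit.timeit(lambda: count_c(DATA, 65), number=3)
print(f"pure Python: {py_seconds:.3f} s, C-backed: {c_seconds:.5f} s")
```

Both functions return the same count. On CPython the C-backed version is typically faster by orders of magnitude, which leaves a sync worker free far sooner.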

Another important takeaway is that long requests tie up sync workers and reduce throughput. As the Gunicorn docs state, this means applications that make requests to third parties should not use the sync worker class:

> The default synchronous workers assume that your application is resource-bound.... Generally this means that your application shouldn’t do anything that takes an undefined amount of time. An example of something that takes an undefined amount of time is a request to the internet. At some point the external network will fail in such a way that clients will pile up on your servers.... any web application which makes outgoing requests to APIs will benefit from an asynchronous worker.

In my opinion this contradicts what they write elsewhere:

> The default class (sync) should handle most “normal” types of workloads.

Isn't making requests to other servers part of a "normal" web application workload? In any case, if throughput matters, sync workers are best suited to fast responses and modest memory footprints. If slow third-party requests do limit throughput, they can be moved outside the WSGI request-response cycle, perhaps by using a Celery task queue. The response to the client can then be fast, while background workers wait on the slow network calls.
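Here is the shape of that pattern as a minimal, standard-library-only sketch. The in-process `queue.Queue` and thread are stand-ins for a real task queue. With Celery, the enqueue step would be a task's `.delay(...)` call and the slow work would run in separate worker processes. Jobs in this sketch, by contrast, are lost if the Gunicorn worker exits. `slow_third_party_call` is a hypothetical placeholder:

```python
import queue
import threading

# Stand-in for a real task queue such as Celery: an in-process queue and one
# background thread per worker process. Jobs are lost if the process exits.
# The thread starts at import time, so this assumes Gunicorn is not run with
# --preload (threads do not survive fork()).
jobs = queue.Queue()
completed = []


def slow_third_party_call(payload):
    # Hypothetical placeholder for e.g. a payment, SMS, or email API request
    completed.append(payload)


def background_worker():
    while True:
        payload = jobs.get()
        try:
            slow_third_party_call(payload)
        finally:
            jobs.task_done()


threading.Thread(target=background_worker, daemon=True).start()


def application(environ, start_response):
    # Enqueue the slow work and respond right away, so the sync worker
    # is not held for the duration of the outbound call.
    jobs.put(environ.get("PATH_INFO", "/"))
    start_response("202 Accepted", [("Content-Type", "text/plain")])
    return [b"queued\n"]
```

Responding with `202 Accepted` signals to the client that the work was accepted but not yet done.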