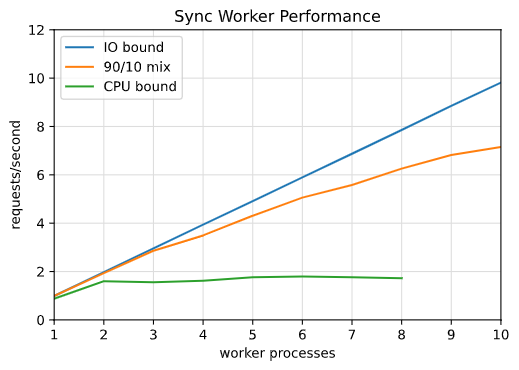

Previously we considered gunicorn's sync workers and found that the throughput of IO-bound workloads could be increased only by spawning more worker processes (or threads, in the case of the gthread worker class). Where this approach is too resource-inefficient, or where third-party request latencies are unacceptable, we can instead use an event-loop-based async worker such as the gevent worker class.

To recap, we found that throughput with sync workers is mathematically limited because each worker processes one request at a time. To handle requests with 1 second of IO-bound work, we needed N worker processes to serve N requests per second.
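That ceiling is Little's law rearranged: throughput equals the number of requests in flight divided by the time each one takes. A small sketch; the worker count of 4 and the `threads = 8` figure are illustrative assumptions, not measurements:

```python
def max_requests_per_second(concurrent_slots: int, seconds_per_request: float) -> float:
    """Little's law rearranged: throughput = requests in flight / time per request."""
    return concurrent_slots / seconds_per_request


# Sync workers: each worker process holds one request at a time.
sync_workers = 4
print(max_requests_per_second(sync_workers, 1.0))  # 4.0

# gthread workers: each worker holds up to `threads` requests (8 is an assumption).
threads = 8
print(max_requests_per_second(sync_workers * threads, 1.0))  # 32.0
```

The only way to raise the ceiling without shortening the requests is to raise the number of slots, which is exactly what an event loop makes cheap.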

One way to decouple consumption of system resources (processes, threads, and memory) from throughput is with an event loop. An event loop allows one main routine to run work defined by other routines, interleaving them to provide asynchronicity and concurrency. Since the routines are generally not each run in their own thread or process, this reduces resource use.
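To make "interleaving routines" concrete, here is a toy round-robin scheduler built on plain generators. It is only an illustration: gevent itself switches between greenlets and waits for IO readiness on a libev/libuv loop, whereas this sketch just runs each routine until it yields and then moves on to the next, all in one thread.

```python
from collections import deque
from typing import Deque, Generator, List

Routine = Generator[None, None, None]


def run(routines: List[Routine]) -> None:
    """Run each routine until it yields, then requeue it behind the others."""
    ready: Deque[Routine] = deque(routines)
    while ready:
        routine = ready.popleft()
        try:
            next(routine)  # run until the routine voluntarily yields control
        except StopIteration:
            continue  # routine finished; drop it from the queue
        ready.append(routine)  # still has work left: schedule it again


log: List[str] = []


def handler(name: str, steps: int) -> Routine:
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # cooperative switch point, standing in for "about to block on IO"


run([handler("a", 2), handler("b", 3)])
print(log)  # ['a:0', 'b:0', 'a:1', 'b:1', 'b:2']
```

Both handlers make progress in one thread because each one gives up control at its `yield`; a handler that never yields would starve the others, which is the problem the next section addresses.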

Thankfully you do not need to implement this design yourself to enjoy its benefits. Several libraries in the Python ecosystem implement this for you. Today we'll use one very popular library, gevent.

Monkeypatching

Since the routines used in gevent are cooperative, you will only see gevent's advantages if your code participates in the event loop. Instead of running ahead synchronously, your code must yield back to the main routine when it's about to do something slow and blocking, like opening a socket to query a database or making an HTTP request. Gevent makes this easy by providing utilities that monkeypatch blocking calls like `time.sleep()` and `socket.create_connection()`.

To illustrate the concept, let's monkeypatch `print()`:

```pycon
>>> print("Hello, monkeypatching")
Hello, monkeypatching
>>> builtin_print = print
>>> def new_print(value, *args, **kwargs):
...     builtin_print(value.upper(), *args, **kwargs)
...
>>> print("Hello, monkeypatching")
Hello, monkeypatching
>>> print = new_print
>>> print("Hello, monkeypatching")
HELLO, MONKEYPATCHING
```

It's as easy as overwriting the function or object with one that behaves how you want it to. When you run `from gevent import monkey; monkey.patch_all()`, gevent monkeypatches enough of the standard library to make your code and its dependencies gevent-compatible.
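One practical consequence of patching by reassignment: only code that looks the function up through the module after the patch sees the replacement. A stdlib-only sketch, where `recording_sleep` and the two caller functions are hypothetical stand-ins (gevent's real replacement sleeps cooperatively rather than recording calls). This is one reason gevent's documentation recommends patching as early as possible, before other modules import the objects being patched.

```python
import time
from time import sleep as early_sleep  # bound to the real function BEFORE patching

original_sleep = time.sleep
calls = []


def recording_sleep(seconds):
    """Hypothetical replacement: records the call instead of blocking."""
    calls.append(seconds)


def looks_up_at_call_time():
    time.sleep(0.01)  # resolves time.sleep when called, so it sees the patch


def bound_at_import_time():
    early_sleep(0.01)  # still points at the original function


time.sleep = recording_sleep  # the monkeypatch
try:
    looks_up_at_call_time()
    bound_at_import_time()
finally:
    time.sleep = original_sleep  # undo the patch

print(calls)  # [0.01]
```

Only the first caller was redirected; the second one really slept, because it held a reference to the original function.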

Gunicorn and gevent

Consider the following simple WSGI application that makes a 1-second database query for each request.

```python
# wsgi_async.py
import psycopg2


def application(environ, start_response):
    # Connect to a local database for IO bound work
    db_connection = psycopg2.connect(
        host="127.0.0.1",
        port="5432",
        dbname="postgres",
        user="postgres",
        password="password",
    )
    db_cursor = db_connection.cursor()
    db_cursor.execute("SELECT pg_sleep(1.0)")
    db_cursor.close()
    db_connection.close()

    print("handled request")
    start_response("204 No Content", [])
    return [b""]
```

Run it with gunicorn's gevent worker class and make 10 concurrent requests to it:

```console
$ gunicorn --bind=127.0.0.1:8000 --workers=1 --worker-class=gevent --worker-connections=1000 wsgi_async:application
[2021-01-26 13:42:33 -0500] [4098] [INFO] Starting gunicorn 20.0.4
[2021-01-26 13:42:33 -0500] [4098] [INFO] Listening at: http://127.0.0.1:8000 (4098)
[2021-01-26 13:42:33 -0500] [4098] [INFO] Using worker: gevent
[2021-01-26 13:42:33 -0500] [4101] [INFO] Booting worker with pid: 4101
```

```console
$ # in another terminal, send 10 requests
$ hey -n 10 -c 10 -t 0 http://127.0.0.1:8000
Summary:
  Total:        10.0905 secs
  ...
  Requests/sec: 0.9910
```

The requests were still handled one at a time for a terrible throughput of one request per second, even though gunicorn is smart enough to run gevent's monkeypatching for us. What gives?

Psycopg, the popular Python PostgreSQL library, explains:

> Coroutine-based libraries (such as Eventlet or gevent) can usually patch the Python standard library in order to enable a coroutine switch in the presence of blocking I/O. ... Because Psycopg is a C extension module, it is not possible for coroutine libraries to patch it.

We have to add an extra monkeypatch from psycogreen:

```python
# wsgi_async.py
import psycogreen.gevent
psycogreen.gevent.patch_psycopg()

import psycopg2


def application(environ, start_response):
    # ...
```

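This takes advantage of a hook psycopg exposes to support coroutines, `psycopg2.extensions.set_wait_callback()`: psycogreen registers a callback that waits on the connection's socket through gevent, so other greenlets can run while a query is in flight.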

```console
$ hey -n 10 -c 10 -t 0 http://127.0.0.1:8000
Summary:
  Total:        1.0318 secs
  ...
  Requests/sec: 9.6920
```

The test shows that the 10 requests were handled concurrently: we can now serve around 10 requests/sec. How high can we go?

```console
$ # 50 simultaneous requests
$ hey -n 50 -c 50 -t 0 http://127.0.0.1:8000 | grep -E -e "(Requests\/sec|responses)"
  Requests/sec: 45.7163
  [204] 50 responses

$ # 75 simultaneous requests
$ hey -n 75 -c 75 -t 0 http://127.0.0.1:8000 | grep -E -e "(Requests\/sec|responses)"
  Requests/sec: 64.6615
  [204] 75 responses

$ # 100 simultaneous requests
$ hey -n 100 -c 100 -t 0 http://127.0.0.1:8000 | grep -E -e "(Requests\/sec|responses)"
  Requests/sec: 83.9704
  [204] 100 responses

$ # above 100 simultaneous requests we get errors
$ # 101 simultaneous requests
$ hey -n 101 -c 101 -t 0 http://127.0.0.1:8000 | grep -E -e "(Requests\/sec|responses)"
  Requests/sec: 83.7357
  [204] 100 responses
  [500] 1 responses

$ # 125 simultaneous requests
$ hey -n 125 -c 125 -t 0 http://127.0.0.1:8000 | grep -E -e "(Requests\/sec|responses)"
  Requests/sec: 98.2715
  [204] 100 responses
  [500] 25 responses
```

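The requests/sec measurement keeps rising as we send more simultaneous requests, so we are no longer limited by the Python application. Instead, we are limited by the number of client connections PostgreSQL allows (its `max_connections` setting), 100 by default. Past that limit, `psycopg2.connect()` raises an error, and the unhandled exception comes back to the client as a 500 response.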

Tuning Postgres is a topic for another time. If we stay under this connection limit with --worker-connections=100 and use a more realistic query, how much throughput do we gain?
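The same flags can also live in a Python config file that gunicorn loads with `-c`. A sketch mirroring the four-worker command used below; the file name is an assumption. One detail worth keeping in mind: `worker_connections` is a per-worker limit, so with `--workers=4` the server as a whole may hold up to 4 × 100 = 400 requests at once, and with one database connection per request that can exceed PostgreSQL's default of 100.

```python
# gunicorn.conf.py: the command-line flags below, expressed as a config file.
# Run with: gunicorn -c gunicorn.conf.py wsgi_async:application
bind = "127.0.0.1:8000"
workers = 4
worker_class = "gevent"
# Maximum simultaneous clients for EACH worker process, not the whole server.
worker_connections = 100

# Not a gunicorn setting (gunicorn skips names it does not recognize):
# worst-case simultaneous requests, here also worst-case database connections.
max_concurrent_requests = workers * worker_connections  # 400
```

To stay strictly under Postgres's 100 connections with four workers, `worker_connections` would need to be at most 25.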

```python
# wsgi_async.py
import psycogreen.gevent
psycogreen.gevent.patch_psycopg()

import psycopg2


def application(environ, start_response):
    # Connect to a local database for IO bound work
    db_connection = psycopg2.connect(
        host="127.0.0.1",
        port="5432",
        dbname="dvdrental",
        user="postgres",
        password="password",
    )
    db_cursor = db_connection.cursor()
    db_cursor.execute("""
        SELECT a.first_name, a.last_name
        FROM actor AS a
        LIMIT 1000;
    """)
    results = db_cursor.fetchall()
    print(f"{len(results)} rows fetched")
    db_cursor.close()
    db_connection.close()

    print("handled request")
    start_response("204 No Content", [])
    return [b""]
```

```console
$ gunicorn --bind=127.0.0.1:8000 --workers=4 --worker-class=gevent --worker-connections=100 wsgi_async:application

$ hey -n 1000 -c 1000 -t 0 http://127.0.0.1:8000 | grep -E -e "(Requests\/sec|responses)"
  Requests/sec: 197.7297
  [204] 1000 responses
```

200 requests/sec technically matches the "hundreds or thousands of requests per second" advertised by gunicorn, but surely we can do better.

> Gunicorn should only need 4-12 worker processes to handle hundreds or thousands of requests per second.

Keep in mind that I'm running Postgres essentially as `docker run postgres`, with default settings and no tuning. At the very least, I could raise the connection limit to increase throughput. On the Python side, we can pool database connections instead of creating and destroying them in each request. Even better: since we're running the same query over and over, we could cache the results and avoid hitting the database entirely.
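Pooling could look something like the following stdlib-only sketch: a bounded pool that reuses connections and makes callers wait, rather than fail, once `max_size` connections are checked out. `BoundedPool` is hypothetical; psycopg2 also ships ready-made pools in `psycopg2.pool`. Under gevent, the sketch assumes `monkey.patch_all()` has already made `threading` (and therefore `queue`) cooperative, so a request waiting for a connection should yield to other greenlets instead of blocking the worker.

```python
import queue
import threading
from contextlib import contextmanager
from typing import Callable, Generic, Iterator, TypeVar

T = TypeVar("T")


class BoundedPool(Generic[T]):
    """Hypothetical pool: reuses connections and never hands out more than max_size."""

    def __init__(self, factory: Callable[[], T], max_size: int) -> None:
        self._factory = factory  # e.g. a function that calls psycopg2.connect(...)
        self._idle: "queue.LifoQueue[T]" = queue.LifoQueue()
        # One permit per connection that may be checked out at the same time.
        self._permits = threading.BoundedSemaphore(max_size)

    @contextmanager
    def connection(self) -> Iterator[T]:
        self._permits.acquire()  # waits while max_size connections are in use
        try:
            try:
                conn = self._idle.get_nowait()  # reuse an idle connection...
            except queue.Empty:
                conn = self._factory()  # ...or open a new one
            try:
                yield conn
            finally:
                # A real pool would also roll back or discard broken connections here.
                self._idle.put(conn)
        finally:
            self._permits.release()
```

In the WSGI app, the `psycopg2.connect(...)` call would move into the factory, the pool would be created once at import time with `max_size` below the Postgres limit, and each request would wrap its queries in `with pool.connection() as db_connection:` instead of closing the connection at the end.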

The async worker did its job of removing gunicorn as the main bottleneck. This opens up a universe of more specific next steps depending on your exact situation.